Website Audit

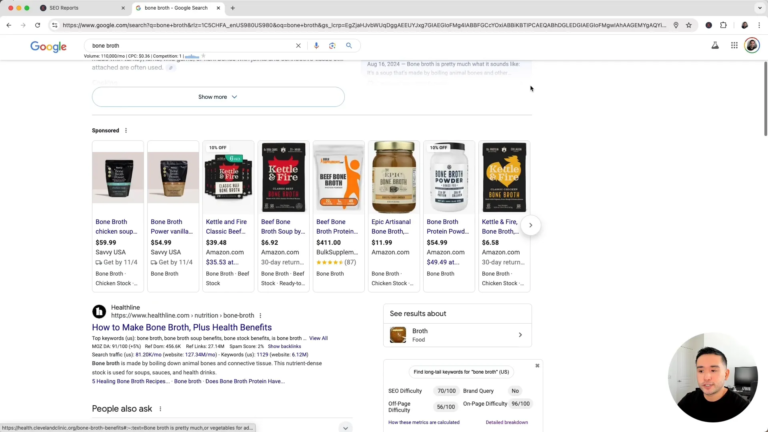

The table below summarizes the main SEO factors to look at when auditing a website.

| SEO Factor | Best Practice | Observed |
| --- | --- | --- |
| Page Titles | Include the keyword, make the title appealing to click from the SERP, and keep it within 60 characters. | |
| Meta description | Have a unique meta description of up to 160 characters for each page. See other best practices below. | |
| Meta Keywords | 1. Select 5 to 8 keyphrases that reflect the theme or context of the page. 2. Use different phrase variants including plurals, misspellings and synonyms. | |
| Content Text | Aim for 200 to 400 words with a keyword density of 5-10%. | |
| Graphic alt text | Include alt text (the `alt` attribute) for all images. | |
| Sitemap | Google sitemaps can potentially help you get a higher proportion of your pages into the index and enable you to notify Google of changes. | |
| Robots.txt file | Use robots.txt to control indexing of pages by search engines. | |
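
Some of the checks in the table can be automated to fill in the "Observed" column. The following is a minimal sketch using only Python's standard `html.parser` module. The names `OnPageAudit` and `audit_page` and the sample HTML are illustrative assumptions, not part of any SEO tool, and the thresholds are simply the 60 and 160 character limits from the table.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects the title, meta description and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Record the image source so the page owner can find it.
            self.images_missing_alt.append(attrs.get("src") or "(no src)")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html):
    """Return a small report for one page (hypothetical helper)."""
    parser = OnPageAudit()
    parser.feed(html)
    parser.close()
    title = parser.title.strip()
    description = parser.meta_description
    return {
        "title": title,
        "title_within_60_chars": 0 < len(title) <= 60,
        "meta_description_within_160_chars": (
            description is not None and 0 < len(description) <= 160
        ),
        "images_missing_alt": parser.images_missing_alt,
    }


# Made-up sample page used only to exercise the checks.
sample = """<html><head><title>Blue Widgets | Example Shop</title>
<meta name="description" content="Buy blue widgets online with free delivery.">
</head><body><img src="a.png" alt="Blue widget"><img src="b.png"></body></html>"""

report = audit_page(sample)
print(report)
```

The report only flags mechanical issues such as length and missing attributes. Whether a title is appealing to click still needs a human reviewer.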

Best practice with Meta descriptions

1. Create a unique meta description for every page where practical, or have the meta description generated automatically so that it includes the product keyword name plus a summary of the site's value proposition.

2. Create a powerful meta description that includes a call to action and encourages click-through from the SERPs.

3. Try to keep it within 15-20 words (160 characters), which is what is visible in Google's SERPs.

4. Limit to 2-4 keyphrases per page.

5. Do not use too many keywords or too many irrelevant filler words within this meta tag, since this will reduce keyword density.

6. Avoid undue repetition: repeat a keyphrase 2 to 3 times at most, otherwise the page may be assessed as spam.

7. Incorporate phrase variants and synonyms within the copy.

8. Vary the wording across all pages within the site.

9. Make it different from the <title> tag, since an identical title and description may be a sign of keyword stuffing.
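
Several of the rules above are mechanical enough to check in code. The sketch below covers the 160 character and 20 word limits, the difference from the title, the repetition limit, and uniqueness across pages. The function names `check_meta_description` and `find_duplicate_descriptions` are hypothetical, and treating "more than 3 repeats" as the cut-off is an assumption based on rule 6.

```python
import re
from collections import defaultdict


def check_meta_description(description, title, keyphrase):
    """Return a list of warnings; an empty list means no rule was tripped."""
    warnings = []
    if len(description) > 160:
        warnings.append("longer than 160 characters")
    if len(description.split()) > 20:
        warnings.append("longer than 20 words")
    if description.strip().lower() == title.strip().lower():
        warnings.append("identical to the <title> tag")
    # Count whole-phrase, case-insensitive occurrences of the keyphrase.
    pattern = r"\b" + re.escape(keyphrase) + r"\b"
    repeats = len(re.findall(pattern, description, flags=re.IGNORECASE))
    if repeats > 3:
        warnings.append("keyphrase repeated more than 3 times")
    return warnings


def find_duplicate_descriptions(descriptions_by_url):
    """Group URLs that share the same description (ignoring case and padding)."""
    seen = defaultdict(list)
    for url, description in descriptions_by_url.items():
        seen[description.strip().lower()].append(url)
    return {text: urls for text, urls in seen.items() if len(urls) > 1}


# Made-up examples for illustration only.
ok = check_meta_description(
    "Buy blue widgets online today with free UK delivery.",
    "Blue Widgets | Example Shop",
    "blue widgets",
)
stuffed = check_meta_description(
    "blue widgets blue widgets blue widgets blue widgets",
    "Blue Widgets | Example Shop",
    "blue widgets",
)
duplicates = find_duplicate_descriptions(
    {"/a": "Same text", "/b": "same text ", "/c": "Other text"}
)
print(ok, stuffed, duplicates)
```

Rules about tone, such as including a call to action, are left out because they cannot be judged reliably by string matching.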

Content

Best practice for page content is:

- Have 200-400 words with a keyword density of 5-10%.

- Include a range of synonyms and Latent Semantic Indexing (LSI) terms as well as identical keyphrases, and vary the use of keywords in target keyphrases, i.e. they don't have to appear as a consecutive phrase.

- Good practice is to include the phrase towards the top of the document, then regularly throughout it, and again towards the end, with possible hyperlinks showing where to go for more information.
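
The word count and keyword density targets above can be measured rather than estimated. Definitions of keyword density vary, so the sketch below makes an explicit assumption: density is the number of words belonging to keyphrase occurrences divided by the total word count, as a percentage. The helper names `tokenize`, `keyword_density` and `content_report` are illustrative.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text, keyphrase):
    """Percentage of words in the text that belong to keyphrase occurrences."""
    words = tokenize(text)
    phrase = tokenize(keyphrase)
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits, i = 0, 0
    # Scan for non-overlapping occurrences of the phrase.
    while i <= len(words) - n:
        if words[i:i + n] == phrase:
            hits += 1
            i += n
        else:
            i += 1
    return 100.0 * hits * n / len(words)


def content_report(text, keyphrase):
    """Compare a page's copy against the 200-400 word and 5-10% targets."""
    word_count = len(tokenize(text))
    density = keyword_density(text, keyphrase)
    return {
        "word_count": word_count,
        "word_count_ok": 200 <= word_count <= 400,
        "density_percent": round(density, 1),
        "density_ok": 5 <= density <= 10,
    }


# Made-up one-sentence example; real page copy would be much longer.
short_text = "Blue widgets are great. Our blue widgets ship fast."
print(content_report(short_text, "blue widgets"))
```

This only counts exact matches of the keyphrase. Synonyms and split phrases, which the guidance above encourages, would need a separate list of variants to be counted.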

The robots.txt file is used to exclude pages from being indexed and to reduce the bandwidth used by crawlers. Examples of pages to exclude are outdated pages, shopping cart pages and broken links.
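
To verify that a robots.txt file excludes the intended pages, its rules can be tested with Python's standard `urllib.robotparser` module. This is a minimal sketch: the paths `/cart/` and `/old-catalogue/`, the domain `www.example.com` and the helper `is_crawlable` are hypothetical placeholders for a real site's structure.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content excluding a shopping cart and outdated pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /old-catalogue/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path, user_agent="*"):
    """Return True if the rules allow the given user agent to fetch the path."""
    return parser.can_fetch(user_agent, "https://www.example.com" + path)


for path in ("/products/blue-widget", "/cart/checkout", "/old-catalogue/2009.html"):
    print(path, is_crawlable(path))
```

Running a list of important URLs through a check like this before uploading a new robots.txt helps catch rules that block more than intended.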

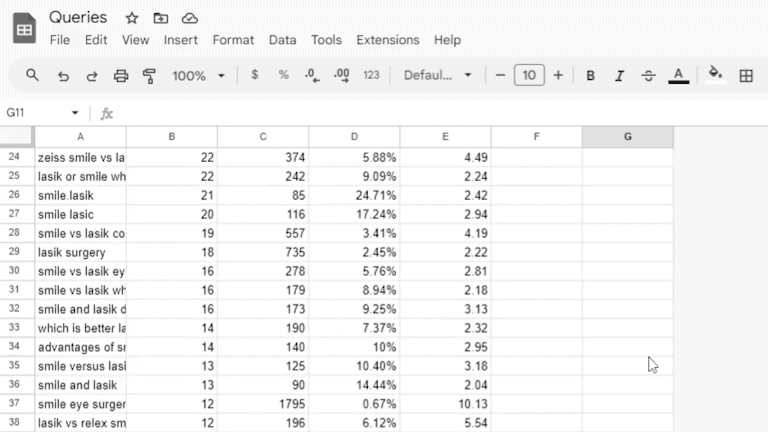

Amendments Audit Trail & Monitoring

It is useful to keep an audit trail and approval process for on-page amendments, alongside monitoring to confirm that the amendments have had the desired effect on the site's ranking.

The best method I have found is to set up a spreadsheet with a different sheet for each page to be amended, as follows:

1) Create a list of pages that need amending and agree which web pages are worth spending time on, i.e. pages that are not discontinued product pages.

2) Create a separate Excel sheet for each website page that needs to be amended, showing the current content and the new content to be uploaded, as shown below.

3) Get sign-off for the amendments and note the date each page was amended.

4) Add the amended page to the keywords watch list so that the effects can be tracked.
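
The same audit trail can also be kept in code and exported for a spreadsheet. The sketch below is one possible shape for it, not the workflow described above: it uses a single CSV table rather than one sheet per page, and the `Amendment` class, its field names and the example values are all assumptions made for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class Amendment:
    page_url: str
    current_content: str
    new_content: str
    approved_by: Optional[str] = None
    date_amended: Optional[date] = None

    def sign_off(self, approver):
        """Step 3: record who approved the change."""
        self.approved_by = approver

    def mark_amended(self, when):
        """Step 3: note the date, but only once sign-off exists."""
        if self.approved_by is None:
            raise ValueError("amendment has not been signed off")
        self.date_amended = when


def to_csv(amendments):
    """Export the audit trail as CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Amendment)])
    writer.writeheader()
    for amendment in amendments:
        writer.writerow(asdict(amendment))
    return buffer.getvalue()


def watch_list(amendments):
    """Step 4: pages that have been amended and should now be tracked."""
    return [a.page_url for a in amendments if a.date_amended is not None]


# Made-up example entry.
entry = Amendment("/products/blue-widget", "Old title", "Blue Widgets | Example Shop")
entry.sign_off("Marketing manager")
entry.mark_amended(date(2024, 1, 15))
print(to_csv([entry]))
print(watch_list([entry]))
```

Refusing to record an amendment date before sign-off keeps the approval step from being skipped by accident.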